Navigating the Future: Your Guide to Ethical and Responsible AI

Insights & Trends

7 minutes

Oct 4, 2024

Ethical AI is essential for sustainable business and societal trust. To ensure long-term success, organizations must prioritize fairness, transparency, and accountability in their AI systems, safeguarding against risks like bias and privacy violations.

Governance and regulation are critical in shaping the future of AI. While frameworks like the EU’s AI Act are setting the standard, global collaboration is necessary to ensure AI development aligns with ethical principles across industries and regions.

Join industry leaders at the Digital Visionaries Symposium 2024 on October 24, 2024, for the panel – “Navigating the Future of AI Driven Financial Ecosystems”. This panel will cover the development of ethical guidelines, the role of regulation, and how organizations can implement these practices to ensure AI is used for the greater good.

As artificial intelligence (AI) continues to transform industries from healthcare to finance, with a productivity impact that could add trillions of dollars in value to the global economy, the need for responsible and ethical AI governance has never been more pressing. While AI presents immense potential for innovation and societal improvement, it also brings risks that require careful management.

This insight examines how ethical AI frameworks are developing, the regulatory landscape, and how organizations can implement AI responsibly to benefit both society and business.

Why Ethical AI is Crucial for Organizations and Society

AI increasingly influences decision-making processes. However, these advancements bring risks such as algorithmic bias and data privacy violations. As AI becomes more integrated into everyday life, ethical AI is essential for safeguarding individual rights and for ensuring long-term business sustainability.

Ethical AI seeks to address these issues by promoting fairness, transparency, and accountability in AI systems. According to UNESCO and the World Economic Forum, responsible AI can enhance trust, reduce bias, and ensure that technology benefits society as a whole. Institutional investors and policymakers have a critical role in promoting ethical AI as part of Environmental, Social, and Governance (ESG) initiatives, ensuring that AI is used to advance positive societal outcomes.

Challenges in Developing and Enforcing AI Regulations

One of the significant challenges in regulating AI is keeping pace with its rapid development. AI evolves faster than legislation, making it difficult to craft effective regulations without stifling innovation.

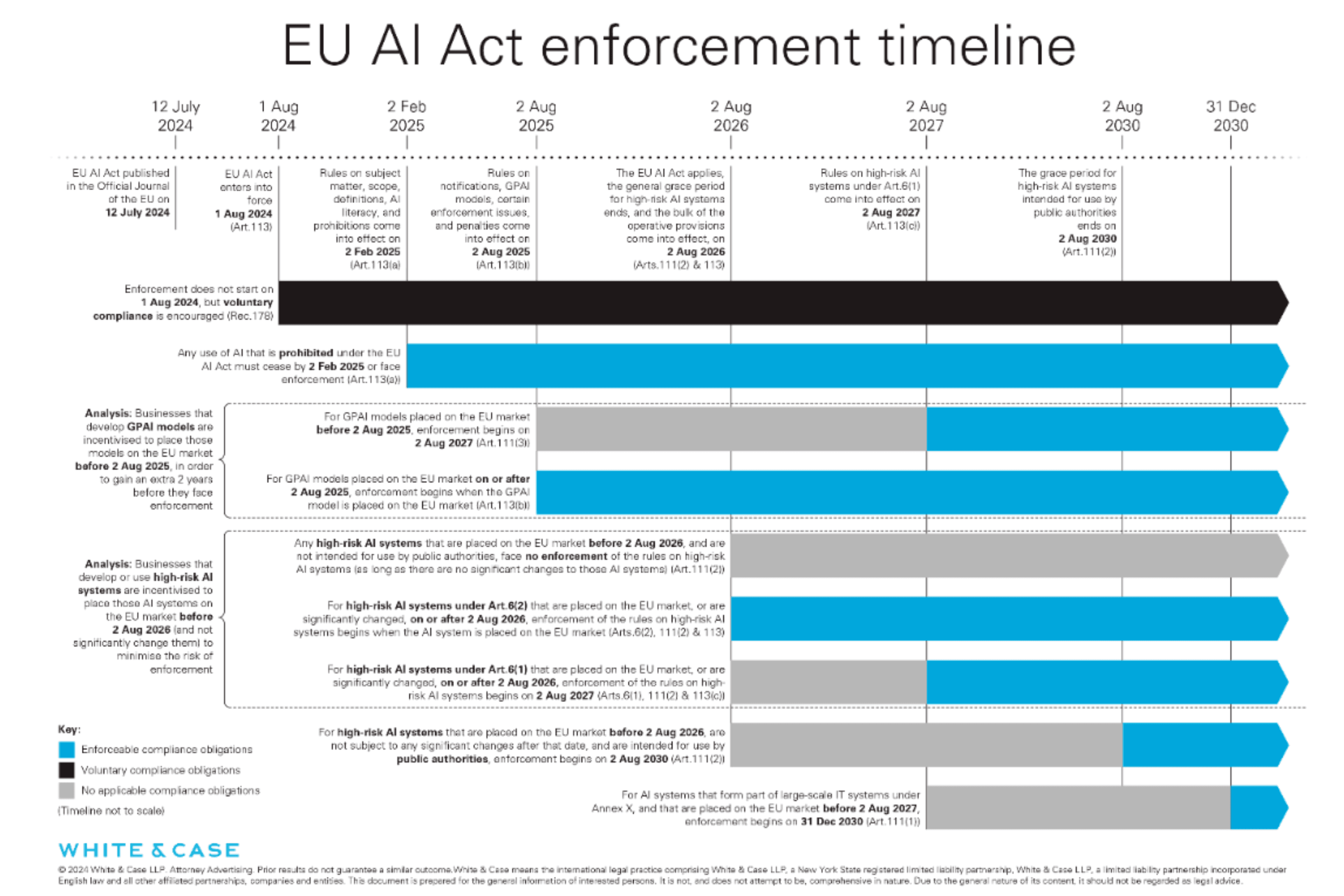

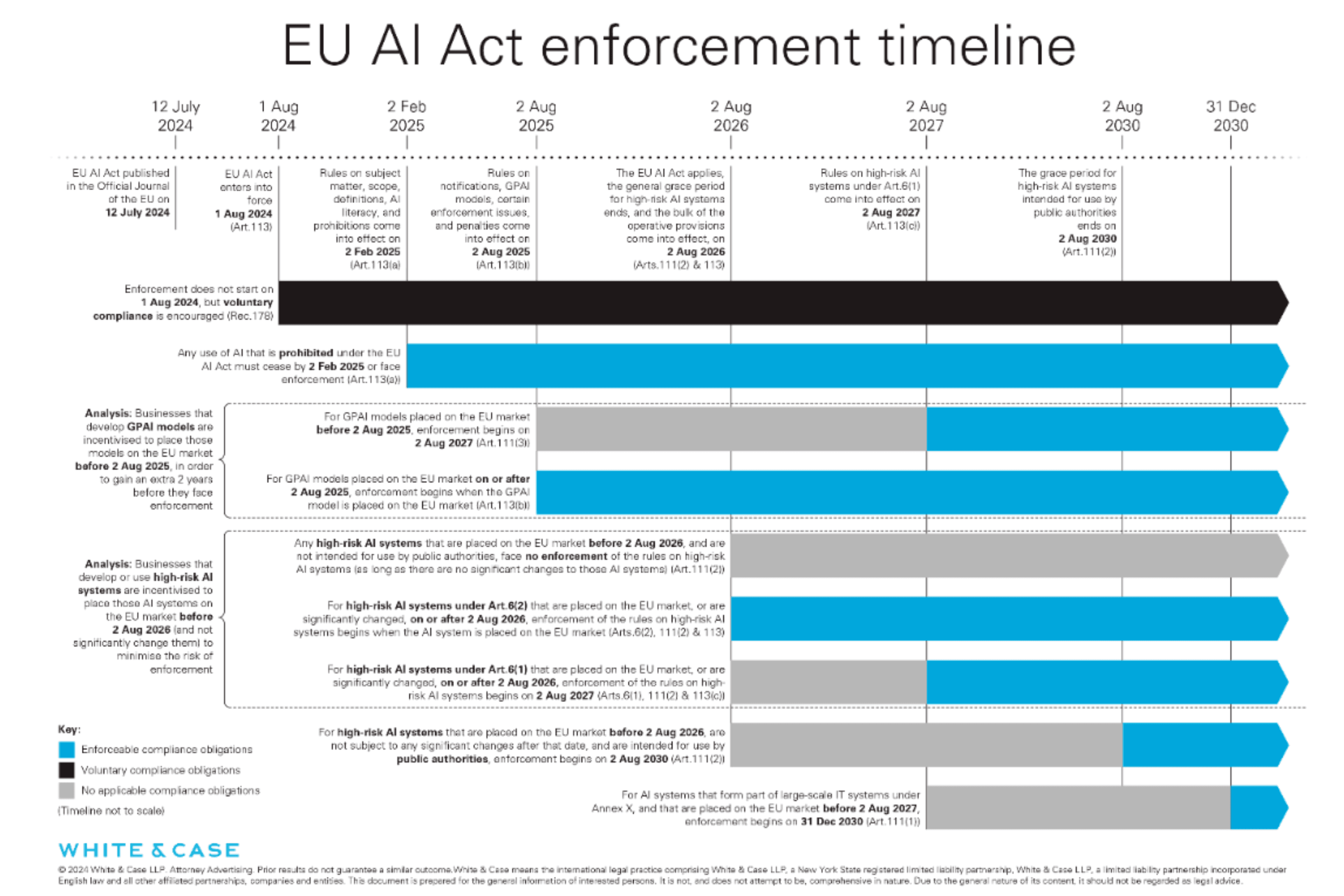

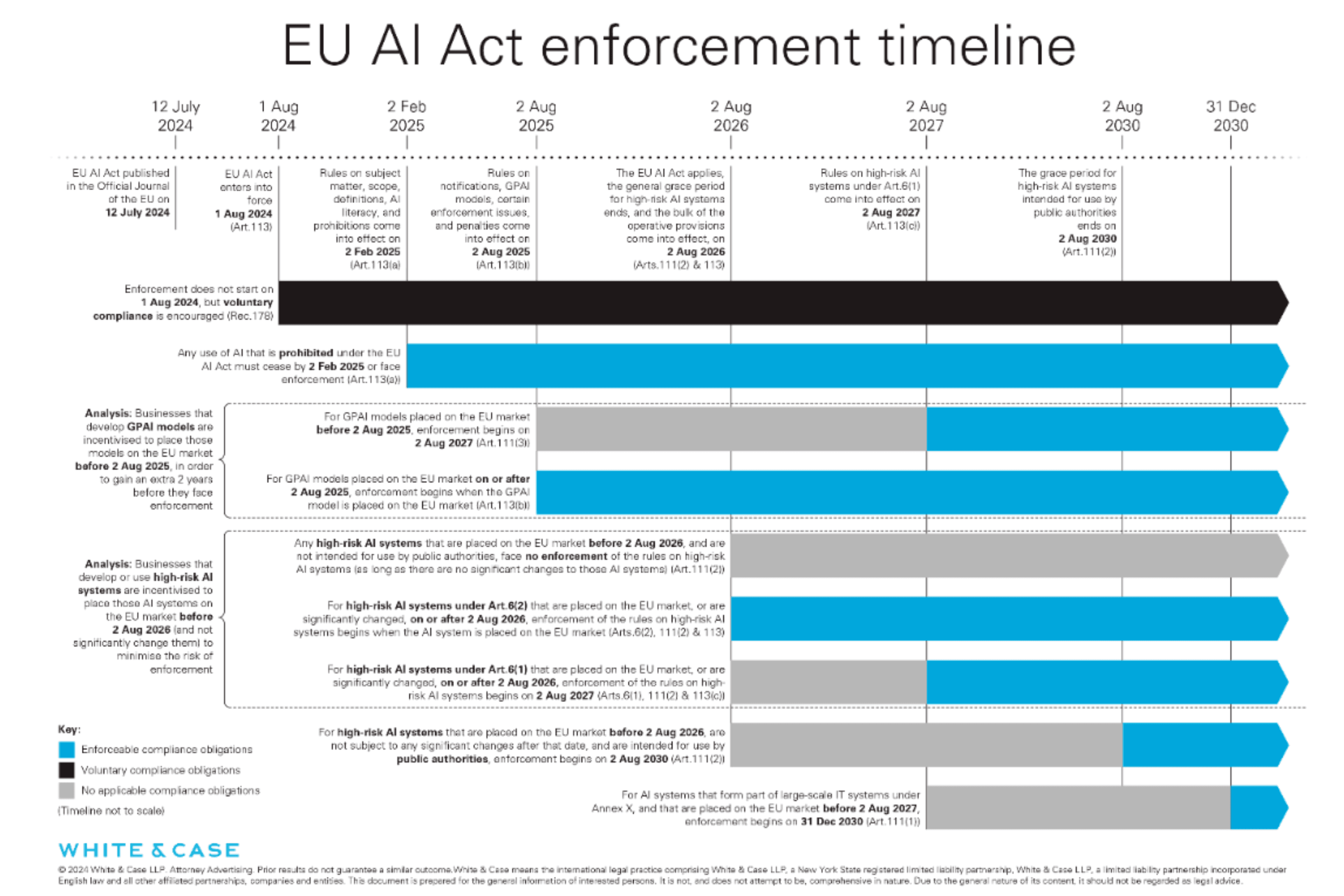

The European Union (EU) is leading the way with its comprehensive AI Act, which classifies AI systems based on their potential risk. Similar efforts are underway in other parts of the world. International organizations like UNESCO advocate for harmonized AI governance frameworks to ensure that AI is regulated fairly and effectively across borders.

Case Study: EU's Artificial Intelligence Act

The EU’s AI Act categorizes AI applications into risk levels, with stricter regulations for high-risk applications, such as those used in critical infrastructure and healthcare. This regulatory framework is seen as a model for other regions, setting a global benchmark for responsible AI governance.

Source: White & Case

How Organizations Can Implement Ethical AI

“Human-in-the-loop” model

One effective approach is the “human-in-the-loop” model, which ensures human oversight during critical decision-making stages to mitigate potential biases. This approach fosters transparency and trust by ensuring that AI decisions are explainable and accountable.

Human-in-the-loop AI training is divided into three stages:

Data annotation: Human data annotators label the original data, which comprises both the input and the intended result.

Training: Human machine learning teams use the correctly labelled data to train the system. Based on this information, the algorithm can identify insights, patterns, and links in the dataset. The ultimate goal is for the algorithm to draw correct conclusions when confronted with new data.

Testing and Evaluation: At this point, the human's responsibility is to correct any erroneous results produced by the machine. Humans focus on rectifying results when the algorithm is unsure about a decision. This is referred to as active learning.
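The testing and evaluation stage can be illustrated with a minimal sketch in Python. It assumes the model reports a confidence score with each prediction. The names `Prediction`, `ReviewLoop`, and `ask_human`, the 0.8 threshold, and the loan examples are all hypothetical and chosen only for illustration. Confident predictions are accepted automatically, while uncertain ones are routed to a human reviewer, and the corrected labels are stored so they could feed the next training round.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Prediction:
    item: str          # identifier of the case being decided
    label: str         # label proposed by the model
    confidence: float  # model confidence between 0.0 and 1.0


@dataclass
class ReviewLoop:
    # Hypothetical cut-off: predictions below it go to a human reviewer.
    threshold: float = 0.8
    # Human-corrected examples, kept for a later retraining round.
    labelled: List[Tuple[str, str]] = field(default_factory=list)

    def process(
        self,
        predictions: List[Prediction],
        ask_human: Callable[[Prediction], str],
    ) -> List[Tuple[str, str]]:
        decisions = []
        for p in predictions:
            if p.confidence >= self.threshold:
                # The model is confident enough: accept its label as is.
                decisions.append((p.item, p.label))
            else:
                # The model is unsure: a human supplies the final label.
                final_label = ask_human(p)
                self.labelled.append((p.item, final_label))
                decisions.append((p.item, final_label))
        return decisions


# Illustrative usage with a stand-in reviewer who always answers "approve".
predictions = [
    Prediction("loan-001", "approve", 0.95),
    Prediction("loan-002", "reject", 0.55),
]
loop = ReviewLoop(threshold=0.8)
results = loop.process(predictions, ask_human=lambda p: "approve")
```

In this sketch only `loan-002` reaches the reviewer, which reflects the idea that human effort is concentrated on the cases where the algorithm is least certain.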

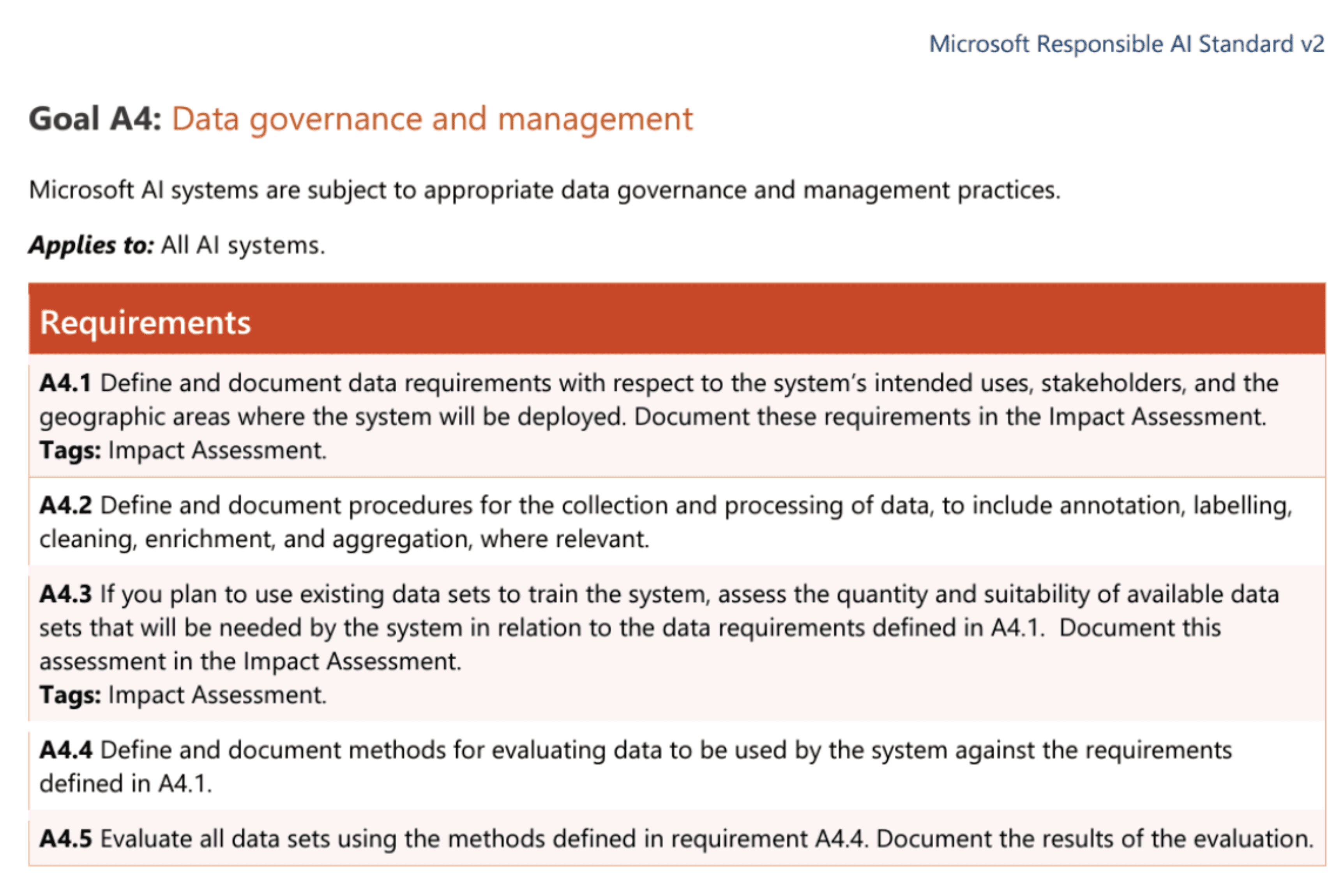

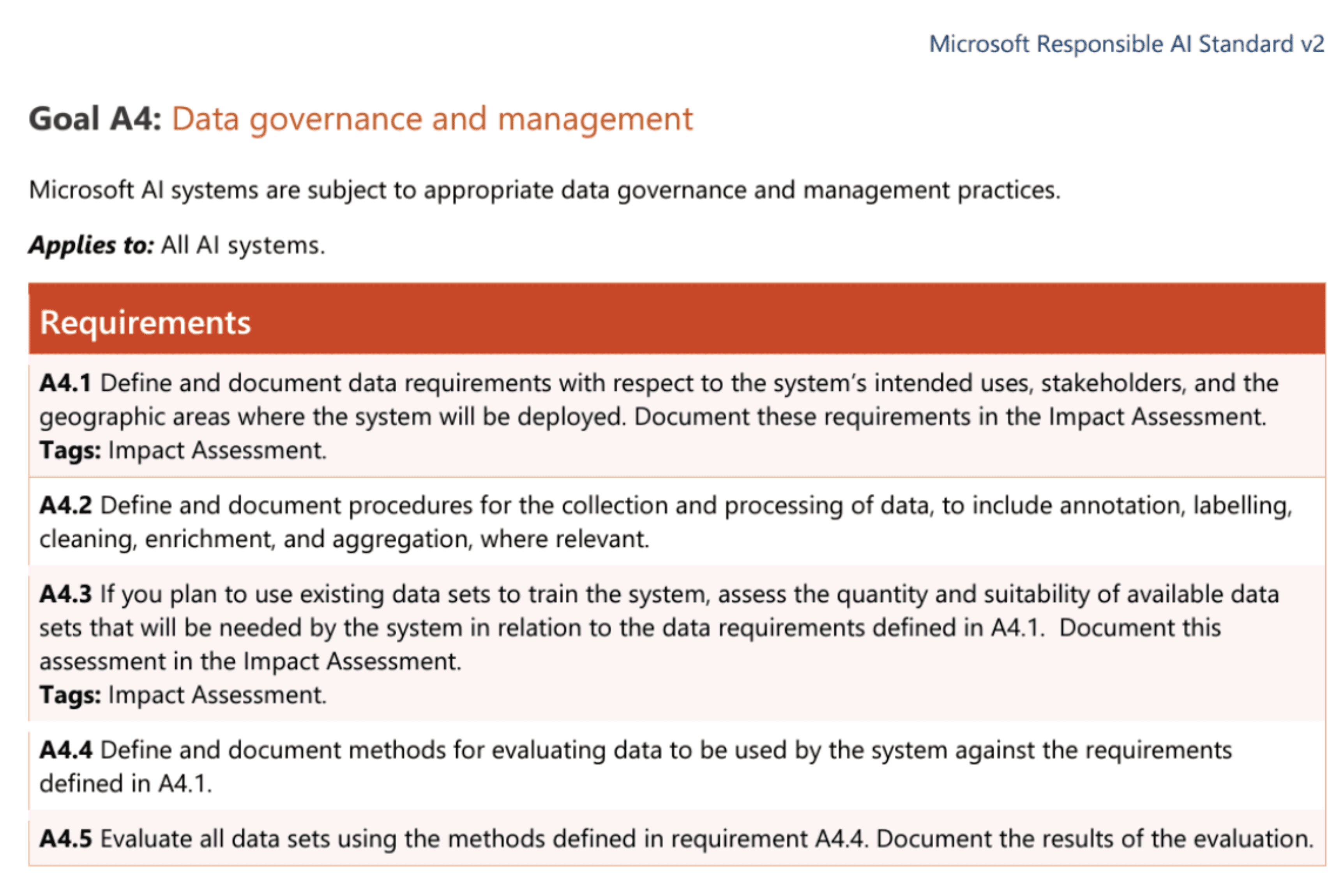

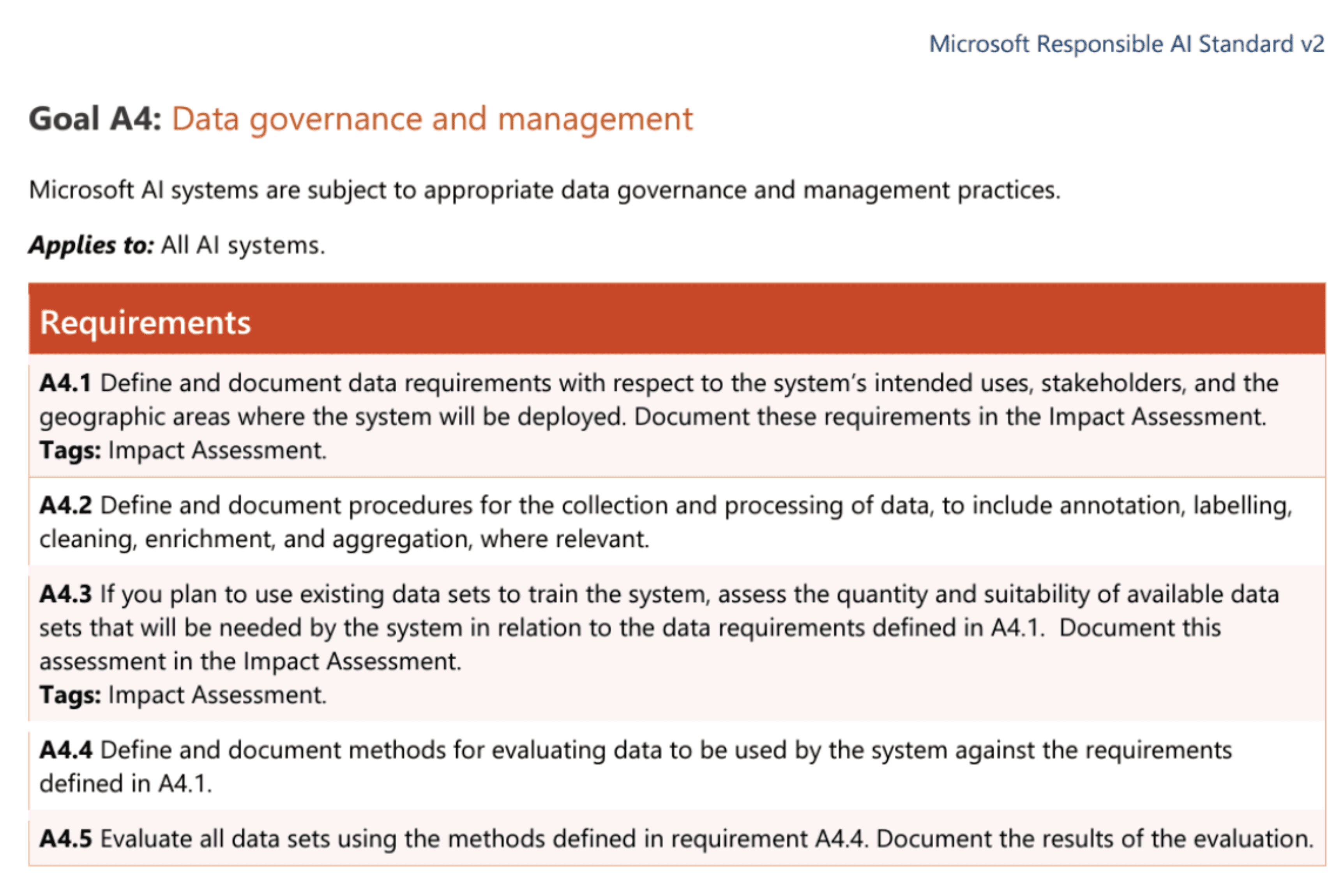

Another best practice involves using frameworks like Microsoft’s responsible AI standards, which help companies align their AI operations with ethical principles such as fairness, privacy, and inclusiveness. These tools allow businesses to identify and mitigate risks, creating AI systems that are reliable and trustworthy.

Case Study: Responsible AI Standards

Microsoft’s Responsible AI practices incorporate principles of fairness, transparency, and accountability. By integrating bias detection tools and explainability features into its AI products, Microsoft helps organizations ensure that their AI systems are ethically sound and compliant with evolving regulatory standards.

Source: Microsoft

Governments and international organizations are pivotal in ensuring AI technologies are developed and used responsibly, with regulatory frameworks helping to prevent potential misuse.

Hong Kong stands out as a leader in Asia with its comprehensive AI governance initiatives aimed at protecting personal data privacy and ensuring ethical AI practices.

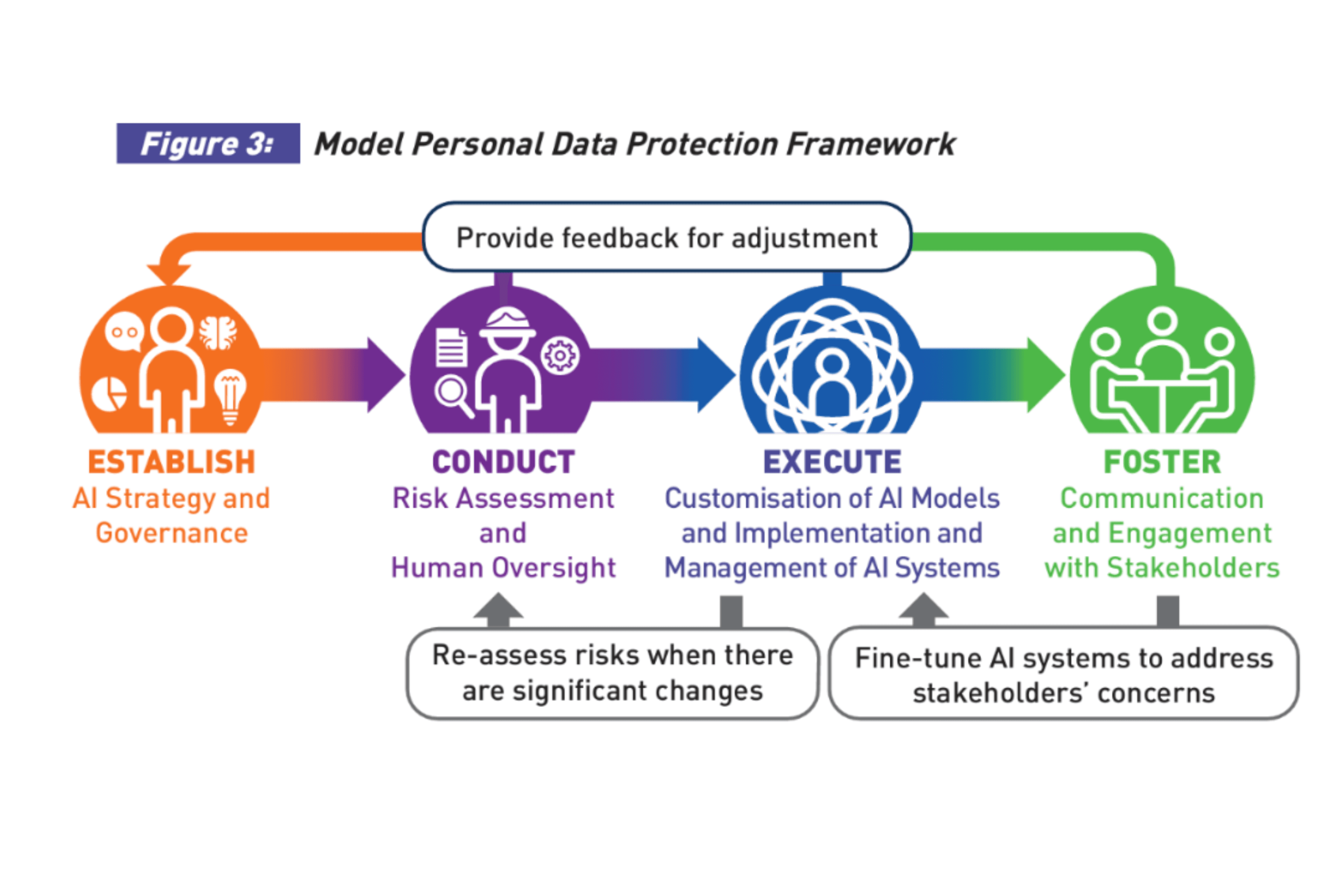

Case Study: Hong Kong's AI Governance Approach

The Artificial Intelligence: Model Personal Data Protection Framework, developed by Hong Kong's Office of the Privacy Commissioner for Personal Data (PCPD), provides organizations with a structured set of best practices to guide the ethical use and development of AI. This framework helps organizations comply with the Personal Data (Privacy) Ordinance (PDPO) and adhere to the principles outlined in the 2021 Guidance on the Ethical Development and Use of Artificial Intelligence. The Model Framework addresses AI governance across four key areas:

Establish AI Strategy and Governance: Organizations are encouraged to formulate an AI strategy and governance policies, including creating an AI governance committee to oversee AI procurement and implementation. This involves employee training on AI to ensure awareness of privacy and ethical considerations.

Conduct Risk Assessment and Human Oversight: A comprehensive risk assessment should be conducted for AI systems, with a "risk-based" management approach. Depending on the risk level, organizations must adopt proportionate risk mitigation measures, including deciding the appropriate level of human oversight.

Customization of AI Models and System Management: Organizations must prepare and manage data, including personal data, for the customization and implementation of AI systems. This includes testing and validating AI models, ensuring both system and data security, and continuously monitoring AI systems.

Communication and Stakeholder Engagement: Regular and effective communication with stakeholders—including internal teams, AI suppliers, customers, and regulators—is essential. This fosters transparency and helps build trust around AI applications.

This structured approach helps organizations in Hong Kong comply with privacy laws and build robust, trustworthy AI systems that align with global ethical standards.

Source: PCPD HK
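The "risk-based" idea described above, where the level of human oversight is proportionate to the assessed risk, can be sketched in a few lines of Python. This is an illustration only: the three risk levels, the three yes/no criteria, and the oversight modes are hypothetical and are not taken from the PCPD Model Framework, which organizations should consult directly for its actual criteria.

```python
from enum import Enum


class RiskLevel(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class Oversight(Enum):
    # Hypothetical oversight modes, ordered from lightest to strictest.
    PERIODIC_AUDIT = "automated decisions with periodic audit"
    REVIEW_FLAGGED_CASES = "human review of flagged cases"
    APPROVE_EVERY_DECISION = "human approval of every decision"


def assess_risk(
    uses_personal_data: bool,
    affects_individual_rights: bool,
    fully_automated: bool,
) -> RiskLevel:
    """Toy scoring rule (an assumption): count how many risk factors apply."""
    score = sum([uses_personal_data, affects_individual_rights, fully_automated])
    if score == 0:
        return RiskLevel.LOW
    if score == 1:
        return RiskLevel.MEDIUM
    return RiskLevel.HIGH


def oversight_for(level: RiskLevel) -> Oversight:
    """Map each risk level to a proportionate oversight mode."""
    mapping = {
        RiskLevel.LOW: Oversight.PERIODIC_AUDIT,
        RiskLevel.MEDIUM: Oversight.REVIEW_FLAGGED_CASES,
        RiskLevel.HIGH: Oversight.APPROVE_EVERY_DECISION,
    }
    return mapping[level]


# Illustrative usage: a credit-scoring system that uses personal data
# and affects individual rights, but is not fully automated.
credit_scoring = assess_risk(
    uses_personal_data=True,
    affects_individual_rights=True,
    fully_automated=False,
)
print(credit_scoring.name, "->", oversight_for(credit_scoring).value)
```

Keeping the assessment and the mapping as separate functions means a governance committee could revise the criteria or the required oversight independently of each other.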

For institutional investors and financial professionals, ethical AI offers significant benefits by reducing legal and reputational risks. Companies that prioritize AI governance are more likely to build sustainable, responsible businesses that appeal to socially conscious investors. Conversely, organizations that fail to implement ethical AI risk falling behind as public scrutiny and regulatory pressures intensify.

Policymakers, too, play a critical role. By encouraging ethical AI practices, they can help prevent societal harms such as deepening inequalities or privacy breaches, while fostering innovation that drives economic growth and public good.

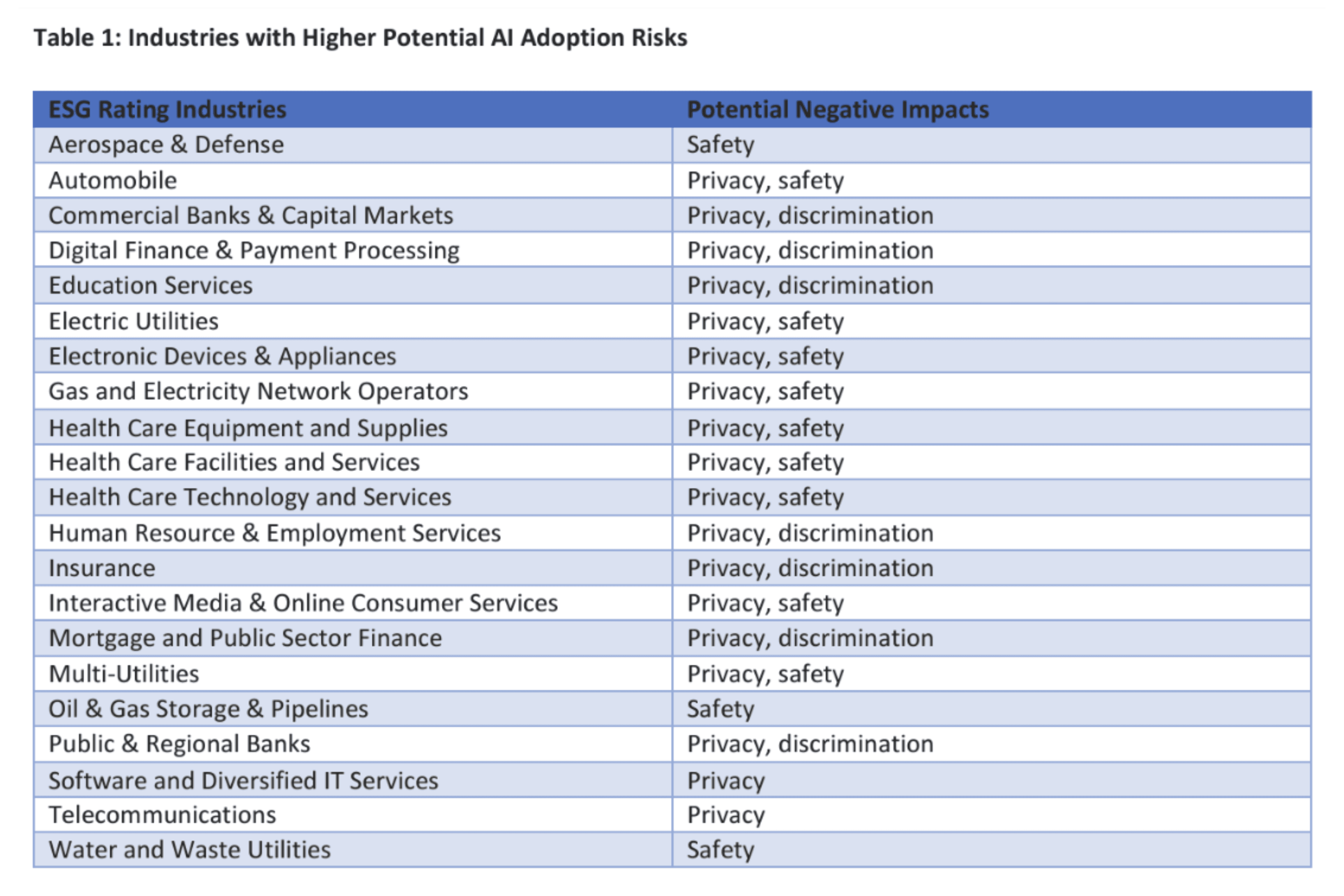

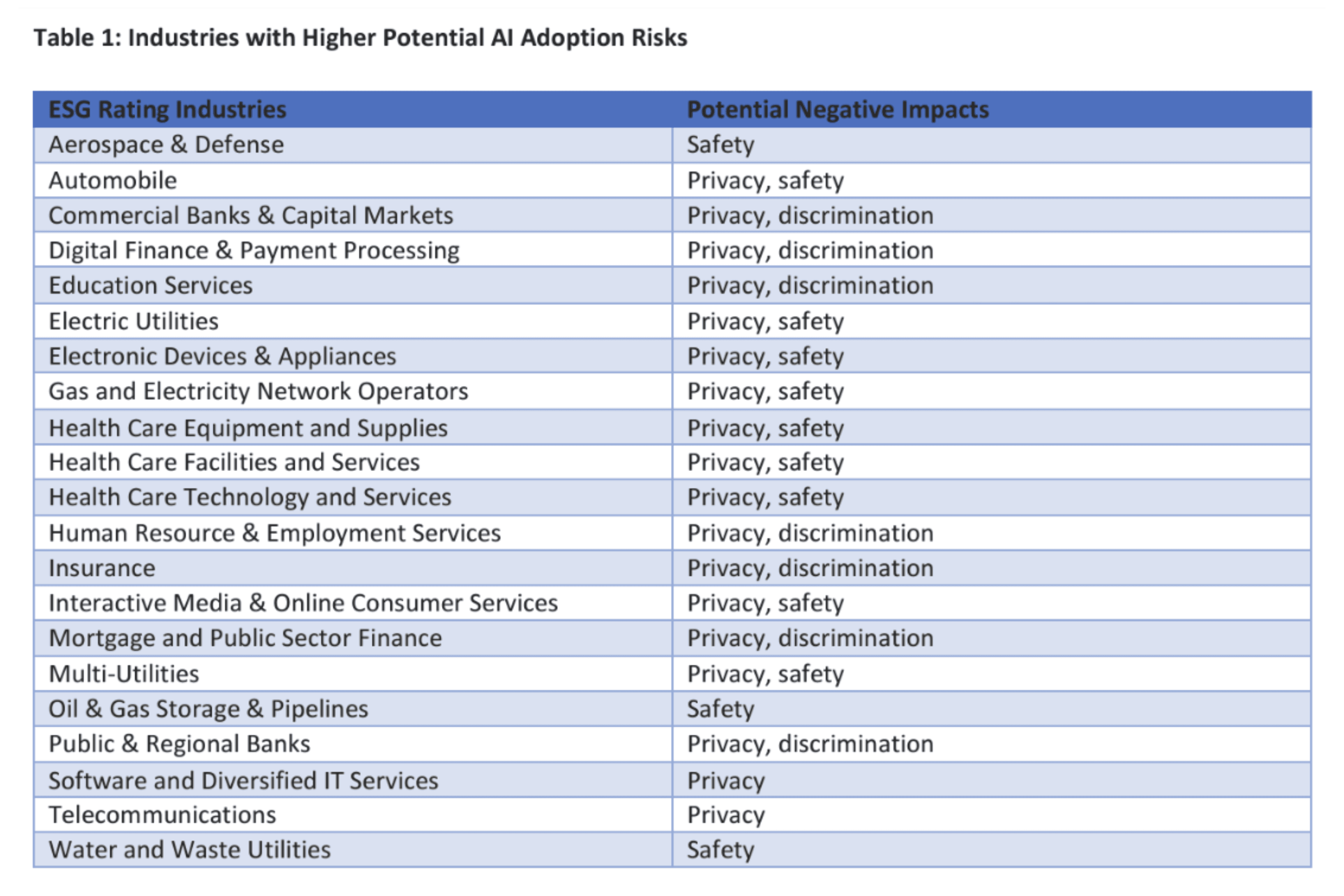

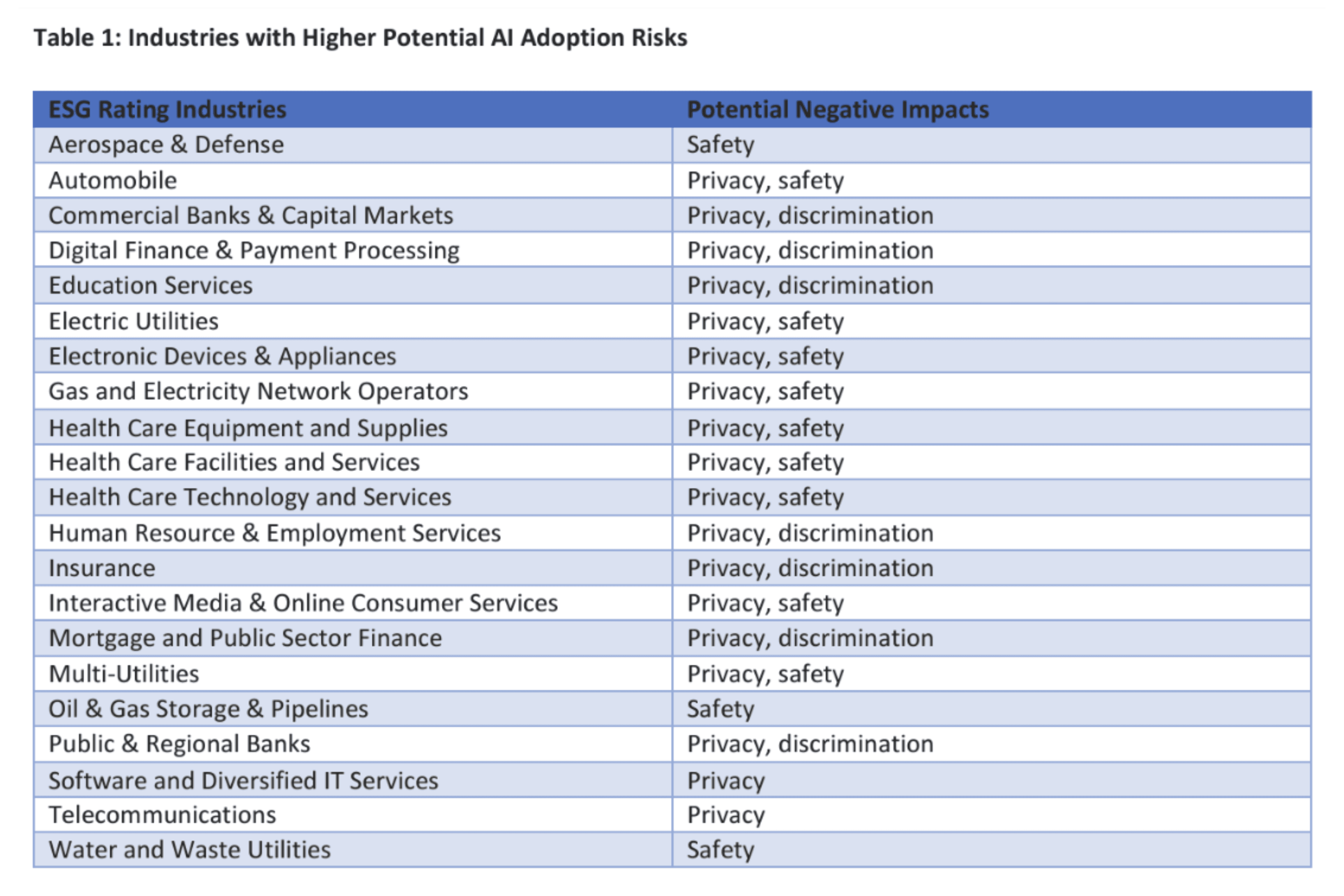

Case Study: ESG Investments in AI

Investors are increasingly incorporating AI governance into their ESG criteria. For example, many venture capital firms now evaluate startups based on their adherence to ethical AI principles, ensuring that AI-driven companies align with societal values. Ethical AI governance is quickly becoming a key factor in attracting investment and fostering long-term success.

Source: ISS ESG

Join us in Hong Kong or online for our full event on 24-25 Oct 2024. Don’t miss our Digital Finance panels on 24 Oct, featuring topics such as “Navigating the Future of AI Driven Financial Ecosystems”. Hear from a world-class panel of experts on how traditional financial institutions and decentralized finance platforms are collaborating to build a unified financial ecosystem in 2025 and beyond.

Join us in Hong Kong or online on October 24-25, 2024:

Secure Your Spot: Register now to attend and gain insights from industry leaders.

Explore Sponsorship: Elevate your brand by becoming a sponsor and showcasing your leadership in financial innovation.

Partner with Us: Partner with us as a supporting organization to influence industry evolution.

Influence the Future

Prepare to contribute your insights and showcase your expertise. Our upcoming feature will enable you to shape global discussions and demonstrate thought leadership.

Sign Up for GDVC Alerts and Exclusive Insights.

*By providing your email address, you agree with our Privacy Policy.

Insights & Resources

Copyright © 2024 Global Digital Visionaries Council. All Rights Reserved.

28 Stanley Street, Central, Hong Kong
